X The Everything CLI

X The Everything CLI

Introduction

I have been using and thinking about AI coding assistants for the past year and I think there is something wrong with how they work. We are quickly moving toward a paradigm where the old role of the Software Integrator, a company whose main goal is to write and maintain software for another company, is re-emerging as a major business opportunity in the U.S. software market. But instead of integrating Word Processors, Spread Sheets and Databases into existing companies, these new Software Integrators are integrating AI agents and chat assistants trained on internal enterprise data in such a way that the data does not leave the premises of the company. Whenever you hear this term (similar is CRMCustomer Relationship Management (CRM) is a technology and strategy that companies use to manage and analyze all interactions with current and potential customers. The goal of a CRM system is to enhance customer relationships, improve customer retention, and drive sales growth by organizing, automating, and synchronizing business processes.), you should feel embarrassed for the software industry, and humanity, as a whole. A Software Integrator is only necessary when the software they are integrating is not responsive enough to the user’s wishes that they can just use it out of the box.

The Rise of AI Middleware

One of the more prominent examples in this new batch of Software Integrators is Glean. The basic premise of Glean is that your software team installs Glean within your AWSAmazon Web Services (AWS) is a subsidiary of Amazon that provides on-demand cloud computing platforms and APIs to individuals, companies, and governments on a metered, pay-as-you-go basis. AWS offers various services related to networking, compute, storage, databases, machine learning, and other processing capacity via server farms distributed globally. One of its foundational services is Amazon Elastic Compute Cloud (EC2), which allows users to have virtual computers available on-demand with high availability. These virtual machines can be controlled via REST APIs, a CLI, or the AWS console, and they emulate most attributes of real computers including CPUs, GPUs, memory, storage, and networking capabilities. or AzureMicrosoft Azure is a cloud computing platform and service created by Microsoft for building, testing, deploying, and managing applications and services through Microsoft-managed data centers. Azure provides software as a service (SaaS), platform as a service (PaaS), and infrastructure as a service (IaaS), supporting many programming languages, tools, and frameworks, including both Microsoft-specific and third-party software and systems. It competes directly with Amazon Web Services (AWS) and Google Cloud Platform, offering services ranging from virtual machines and databases to AI and machine learning capabilities, serving millions of customers including startups, enterprises, and government agencies worldwide. account and connects it to your company chat (Slack, Webex, Teams, etc.), code repo (Bitbucket, Github, etc.) and document store (Google Drive, Confluence, etc.) You then have a chat bot over all of your internal company data and can gain insights from it. It will also lessen the time to train employees on parts of the company they are unfamiliar with.

There are also many companies trying to tackle the integrator role by automating the existing workflows and processes of a company like the automation company Zapier. The premise of Zapier is that you connect all of the same sources of information to Zapier and then build out workflows that represent your business processes. These automated workflows task LLMs or peices of code with collecting and modifying data, talking to customers, etc.

In both of these setups however, to get the benefit of AI in my workflows I need to get permission in order to start using them. I then have to train either myself or my co-workers on how to use them, dividing the focus of our company away from our core mission. I may have to convince management at my company that this relatively expensive and recurring SaaSSoftware as a Service (SaaS) is a cloud computing service model in which a provider delivers application software to clients while managing the required physical and software resources. SaaS is usually accessed via a web application. Unlike other software delivery models, it separates “the possession and ownership of software from its use”. SaaS use began around 2000, and by 2023 was the main form of software application deployment. product will improve efficiency. I then have to convince the other employees that yet another new tool will be time saving instead of distracting. This is somewhat mitigated by the fact that for some of these products I can talk to the product itself to figure out how it works, but many will not have the time or skill set to do this. The best companies will realize that this is a worthwhile cost, pay it, and win over time. But for the average user, AI is just the same as having a software engineer that they can ask to automate things for them - with all of the double checking, technical jargon, and miscommunication which that implies.

We have built these systems in the mirror image of the old systems. If I the customer want something, I ask the company to make it for me and then I get back something that somewhat resembles what I asked for. The loop is tighter now because of these AI tools, but it is the same loop. It also incurs the same types of cost-benefit calculations when you use them. How much of my time will it take to explain to the AI what I want? What is the likelihood that it will understand and deliver the correct product? We have democratized the ability to produce working software but the same edge cases, deep technical knowledge, and problem solving abilities are required if something goes wrong.

Since the user has not had to learn how to solve the problem from the outset, when problems inevitably arise with the scaling complexity of the codebase, they have relearn the code generated by the LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on.. This is a dificult task made worse by layers and layers of hacks that the coding assistant made to get the code to do what the user asked for. All of that “time saved” up front is now wasted on the back end.

What would a piece of software look like that would break that loop entirely? When I tell my Mom, “Hey you could use AI to automate the things you are doing at work”, this seems like an insurmountable task, partly because she hasn’t written software since college and partly because she is so busy serving clients in the current workflow that she doesn’t have the time and energy to use a new tool. How can we change this? I think the answer comes through a similar idea from content recommender algorithms like YouTube: the things people want to see in their feed are similar to the things they watch the most. In the same way, the things that people would like to automate are revealed by the tasks that they do the most with a computer.

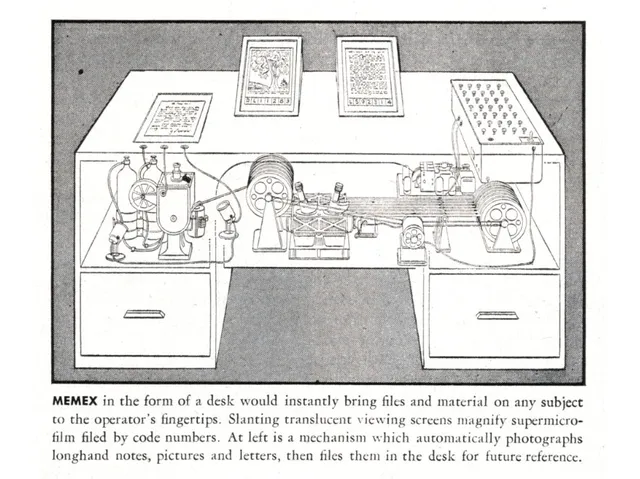

Revealed Preferences and the Memex

A few nights ago I was reading Project Xanadu: The Internet That Might Have Been, a blog post about Project XanaduProject Xanadu was the first hypertext project, founded in 1960 by philosopher and sociologist Ted Nelson, predating the World Wide Web by three decades. Nelson’s vision was for a global network of interconnected documents with two-way links, version tracking, micropayments for content creators, and the ability to see the context of any quote or reference. Unlike the Web’s one-way links that can break when pages move or disappear, Xanadu was designed with permanent, bidirectional links that maintain their connections. The system also featured “transclusion,” allowing content to be included from one document into another by reference rather than copying. While Project Xanadu never achieved widespread implementation, its concepts influenced hypertext development and continue to be studied as an alternative vision for organizing information digitally. which tried to build the Memex, a machine that could automatically organize the sea of information that scientists were swimming in after the Second World War. One of the things that struck me about this Memex idea is that the user’s own actions reveal what data should be kept and stored and later revealed to them again though “trails”. Essentially a trail is a browser history, but you can share them and create a network of research that you have done, made by the path you take through the Internet. This sort of tracking is often done on the Internet but rarely to users’ benefit. YouTube recommendations are the only thing I can think of where it actually works for you. Even with that product, you still have a hard time curating what content is served to you. Want to only be served things that make you a better person like History, Math, and Science? Sorry, your revealed preference is for drama and nonsense, guess you will be getting that til the end of time. Suffice it to say that revealed preferences can be used for good and ill, but certainly they are addictive and powerful when setup in the correct way.

So how are business processes revealed to the computer? I think in the following way: every time you use a website, traffic is sent and recorded by your browser, usually via RESTREST is a software architectural style that was created to describe the design and guide the development of the architecture for the World Wide Web. REST defines a set of constraints for how the architecture of a distributed, Internet-scale hypermedia system, such as the Web, should behave. The REST architectural style emphasizes uniform interfaces, independent deployment of components, the scalability of interactions between them, and creating a layered architecture to promote caching to reduce user-perceived latency, enforce security, and encapsulate legacy systems. APIAn application programming interface (API) is a connection between computers or between computer programs. It is a type of software interface, offering a service to other pieces of software. A document or standard that describes how to build such a connection or interface is called an API specification. A computer system that meets this standard is said to implement or expose an API. The term API may refer either to the specification or to the implementation. calls. These take the form of GETGET is an HTTP request method used to retrieve data from a web server. It is one of the most common HTTP methods and is designed to request a representation of a specified resource without modifying it. GET requests can include parameters in the URL query string to specify what data to retrieve. Because GET requests are idempotent (making the same request multiple times produces the same result) and don’t change server state, they can be safely cached, bookmarked, and shared. GET requests are limited in the amount of data they can send (typically around 2048 characters in the URL) and should never be used for sensitive information like passwords, as the data appears in the URL., asking for some piece of information, POSTPOST is an HTTP request method used to send data to a server to create or update a resource. Unlike GET requests, POST requests include data in the request body rather than the URL, allowing for larger amounts of data to be transmitted and keeping sensitive information out of browser history and server logs. POST requests are not idempotent, meaning multiple identical requests may have different effects (such as creating multiple records). They’re commonly used for submitting forms, uploading files, and creating new resources through APIs. POST requests are not cached by default and cannot be bookmarked since the data is sent in the request body rather than the URL. updating the server with some completely new information, and PUTPUT is an HTTP request method used to update or replace an existing resource on a server, or create it if it doesn’t exist. Unlike POST, which typically creates new resources, PUT is idempotent—making the same PUT request multiple times will produce the same result. When using PUT, the client sends the complete updated representation of the resource to a specific URL. For example, a PUT request to /users/123 would replace the entire user record with ID 123 with the new data provided. In RESTful API design, PUT is preferred for updates where the client specifies the exact URI of the resource being modified. updating stale information on the server. These actions are the atoms of the web universe and any automation task over an existing website will use all of these in order to drive that automation. There may also be some massaging of data by the front-end JavaScriptJavaScript (JS) is a high-level, interpreted programming language and a core technology of the World Wide Web, alongside HTML and CSS. Created in 1995, JavaScript enables interactive web pages and is an essential part of web applications, with the vast majority of websites using it for client-side behavior. JavaScript is dynamically typed, prototype-based, and supports multiple programming paradigms including object-oriented, imperative, and functional programming. While originally limited to browsers, JavaScript has expanded to server-side development through platforms like Node.js, and is now used for mobile app development, desktop applications, and even embedded systems. Its event-driven nature and asynchronous capabilities make it particularly well-suited for handling user interactions and real-time updates., looking up things directly from a database etc., but if the webpage has exposed it to the user then it will likely happen through RESTREST is a software architectural style that was created to describe the design and guide the development of the architecture for the World Wide Web. REST defines a set of constraints for how the architecture of a distributed, Internet-scale hypermedia system, such as the Web, should behave. The REST architectural style emphasizes uniform interfaces, independent deployment of components, the scalability of interactions between them, and creating a layered architecture to promote caching to reduce user-perceived latency, enforce security, and encapsulate legacy systems. APIAn application programming interface (API) is a connection between computers or between computer programs. It is a type of software interface, offering a service to other pieces of software. A document or standard that describes how to build such a connection or interface is called an API specification. A computer system that meets this standard is said to implement or expose an API. The term API may refer either to the specification or to the implementation. calls.

State of the Art

The “AI native” solution to automating work, has been to reveal the concept of a MCPModel Context Protocol (MCP) is an open protocol introduced by Anthropic that enables AI models to securely interact with external data sources and tools. MCP provides a standardized way for large language models to connect to various resources like databases, APIs, and file systems through a consistent interface. It works by exposing server endpoints that accept structured requests (typically in JSON format) and translate them into appropriate actions using existing APIs. This allows AI assistants to access real-time information and perform actions beyond their training data, while maintaining security and control over what resources the model can access and how it can use them. (Model Context Protocol) endpoint on most APIs on the internet so that LLMs can more easily call these APIs. MCPModel Context Protocol (MCP) is an open protocol introduced by Anthropic that enables AI models to securely interact with external data sources and tools. MCP provides a standardized way for large language models to connect to various resources like databases, APIs, and file systems through a consistent interface. It works by exposing server endpoints that accept structured requests (typically in JSON format) and translate them into appropriate actions using existing APIs. This allows AI assistants to access real-time information and perform actions beyond their training data, while maintaining security and control over what resources the model can access and how it can use them. is an endpoint on the companies’ server that takes some structured text (JSONJSON is an open standard file format and data interchange format that uses human-readable text to store and transmit data objects consisting of name–value pairs and arrays. It is a commonly used data format with diverse uses in electronic data interchange, including that of web applications with servers.) and uses the existing endpoints to make APIAn application programming interface (API) is a connection between computers or between computer programs. It is a type of software interface, offering a service to other pieces of software. A document or standard that describes how to build such a connection or interface is called an API specification. A computer system that meets this standard is said to implement or expose an API. The term API may refer either to the specification or to the implementation. calls on behalf of the LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on.. This setup has several limitations. First, you haven’t really automated anything; the LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on. still has a probabilistic chance of using the MCPModel Context Protocol (MCP) is an open protocol introduced by Anthropic that enables AI models to securely interact with external data sources and tools. MCP provides a standardized way for large language models to connect to various resources like databases, APIs, and file systems through a consistent interface. It works by exposing server endpoints that accept structured requests (typically in JSON format) and translate them into appropriate actions using existing APIs. This allows AI assistants to access real-time information and perform actions beyond their training data, while maintaining security and control over what resources the model can access and how it can use them. endpoint correctly. Second, LLMs have a hard time reasoning which of these MCPModel Context Protocol (MCP) is an open protocol introduced by Anthropic that enables AI models to securely interact with external data sources and tools. MCP provides a standardized way for large language models to connect to various resources like databases, APIs, and file systems through a consistent interface. It works by exposing server endpoints that accept structured requests (typically in JSON format) and translate them into appropriate actions using existing APIs. This allows AI assistants to access real-time information and perform actions beyond their training data, while maintaining security and control over what resources the model can access and how it can use them. tools to use, so tools that were created directly by the model companies work much better than third party ones. This creates a second class citizen problem where any tool that OpenAI puts in its model pre-training is likely to be used correctly and any tool you make with MCPModel Context Protocol (MCP) is an open protocol introduced by Anthropic that enables AI models to securely interact with external data sources and tools. MCP provides a standardized way for large language models to connect to various resources like databases, APIs, and file systems through a consistent interface. It works by exposing server endpoints that accept structured requests (typically in JSON format) and translate them into appropriate actions using existing APIs. This allows AI assistants to access real-time information and perform actions beyond their training data, while maintaining security and control over what resources the model can access and how it can use them. is unlikely to work reliably, meaning that OpenAI has a dogged advantage in their own ecosystem.

After providing about 10 tools to the LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on., the likelihood that the model will choose the correct tool to use goes down dramatically. At a higher level, this is just a replication of the existing workflow that humans do, made slightly easier for our faster thinking friends, the LLMs, to use. LLMs may be significantly cheaper on an individual run to use, but given how error-prone they are, they end up being pretty expensive, especially if you scale them up to serve your entire customer base. If you don’t have a “pay as you go” profit scheme then you have to either hope that your users use the service less than your usage costs (See Movie Pass for how this can go wrong in a pretty obvious way, since you are effectively subsidizing OpenAI or the movie theaters in the Movie Pass case), or you need to raise your prices to account for this and you end up being at least as expensive as the model companies (200 bucks a month is a high price for most consumer facing applications).

Reaching for Xanadu

What I want the AI to do is to figure out what I am doing that is successful and automate that in computer code rather than high level text. Once my workflow is established, why should I need a subscription to OpenAI at all? If you “Go all in on AI” as a company you have effectively signed yourself up to vendor lock-in very similar to the lock-in that cloud computing has. This is slightly different since as far as I can tell, the base model from OpenAI is roughly as good as from Anthropic, and switching them out is just changing the URLA uniform resource locator (URL), colloquially known as an address on the Web, is a reference to a resource that specifies its location on a computer network and a mechanism for retrieving it. A URL is a specific type of Uniform Resource Identifier (URI), although many people use the two terms interchangeably. URLs occur most commonly to reference web pages (HTTP/HTTPS) but are also used for file transfer (FTP), email (mailto), database access (JDBC), and many other applications. and credit card info you have setup to serve your LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on. requests within your company. However, you are still locked in to the entire idea that you need a LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on. to run your company. When the model companies inevitably consolidate in the future, there will be much less choice on which models you can use. Your profits will be eaten up by the remaining monopolistic company.

LLMs have the benefit of being able to deal with a lot of situations out of the box and so they are incredibly useful, but what we want is to have them figure out what to do and encode it in a format that is cheaper to run, does not rely on third parties, and is easier to debug if something goes wrong, i.e. computer code. If we can find a way to combine probabilistic code generation with deterministic code generation such that the user does not need a degree in Computer Science to get halfway decent results, then we could eat the lunch of all of the software integrators without resigning our freedom and profit margins to OpenAI and Microsoft, or similarly Anthropic and AWS.

How can we accomplish this? I think the answer is the Automated Command Line Interface. We want build a personalized command line interface for the user that is based on their usage of the Internet. Let’s say the user is throwing a pizza party, the user goes to Papa John’s website, where we would see them get all of the current pizza deals, make some selections on the page to add to their cart and finally checkout with their address and credit card information. All the while, RESTREST is a software architectural style that was created to describe the design and guide the development of the architecture for the World Wide Web. REST defines a set of constraints for how the architecture of a distributed, Internet-scale hypermedia system, such as the Web, should behave. The REST architectural style emphasizes uniform interfaces, independent deployment of components, the scalability of interactions between them, and creating a layered architecture to promote caching to reduce user-perceived latency, enforce security, and encapsulate legacy systems. requests to Papa John’s server are being made for: GETGET is an HTTP request method used to retrieve data from a web server. It is one of the most common HTTP methods and is designed to request a representation of a specified resource without modifying it. GET requests can include parameters in the URL query string to specify what data to retrieve. Because GET requests are idempotent (making the same request multiple times produces the same result) and don’t change server state, they can be safely cached, bookmarked, and shared. GET requests are limited in the amount of data they can send (typically around 2048 characters in the URL) and should never be used for sensitive information like passwords, as the data appears in the URL.ting the webpage, POSTPOST is an HTTP request method used to send data to a server to create or update a resource. Unlike GET requests, POST requests include data in the request body rather than the URL, allowing for larger amounts of data to be transmitted and keeping sensitive information out of browser history and server logs. POST requests are not idempotent, meaning multiple identical requests may have different effects (such as creating multiple records). They’re commonly used for submitting forms, uploading files, and creating new resources through APIs. POST requests are not cached by default and cannot be bookmarked since the data is sent in the request body rather than the URL.ing the desired pizza to the cart, and POSTPOST is an HTTP request method used to send data to a server to create or update a resource. Unlike GET requests, POST requests include data in the request body rather than the URL, allowing for larger amounts of data to be transmitted and keeping sensitive information out of browser history and server logs. POST requests are not idempotent, meaning multiple identical requests may have different effects (such as creating multiple records). They’re commonly used for submitting forms, uploading files, and creating new resources through APIs. POST requests are not cached by default and cannot be bookmarked since the data is sent in the request body rather than the URL.ing the credit card info. So, our program would break each of these requests down into Skills get_menu, add_pizza_to_cart, checkout_cart and write the meta skill of give_me_papa_johns_pizza which does all three.

We encode this process in PythonPython is a high-level, general-purpose programming language created by Guido van Rossum and first released in 1991. Its design philosophy emphasizes code readability and simplicity through the use of significant indentation (whitespace) to define code blocks instead of braces or keywords. Python is dynamically typed (types are checked at runtime) and features automatic garbage collection for memory management. It supports multiple programming paradigms including object-oriented, functional, and procedural programming. Python’s extensive standard library and vast ecosystem of third-party packages make it popular for web development, data science, machine learning, automation, scientific computing, and scripting. Its clear syntax and gentle learning curve have made it one of the most widely-used programming languages for both beginners and professionals. code with a decoratorA decorator in programming refers to a design pattern or language feature that allows behavior to be added to individual objects or functions without modifying their structure. In Python, decorators are a particularly powerful feature that use the @ symbol to wrap functions or methods with additional functionality. For example, a decorator can add logging, timing, authentication, or caching to a function. Decorators work by taking a function as input and returning a modified version of that function. In object-oriented programming, the decorator pattern allows functionality to be extended at runtime by wrapping objects with decorator objects that add new behaviors while maintaining the same interface as the original object. that tracks where the skill is in our codebase and reveals it to our automated command line interface so that we can use it later. Then, we let the user know that they have a new skill available. If the user continues to use the browser to do other business operations that involve ordering a pizza, for example setting up a pizza party, we can combine the order_paper_plates, order_plastic_utensils, order_party_hats, check_attendees_schedule commands into a new uber command to setup_pizza_party that would ask for the email list involved and order the appropriate amount of pizza to the right conference room and notify the user that they have a new Setup Pizza Party skill available.

Note that determining where a Skill starts and stops is not too important. Since we are in a regime where computer code is cheap to write and maintain, we should have as much of it as we possibly can, leaving what skills to use and reuse to the user. More on this later.

The user is screaming at us what they want to do and how, but because we have not had the ability to really listen until now, only CEOs and Software Engineers could really utilize the power of computers. The rest of the world is still in the typewriter era, with a better screen attached.

When you get these skills and skill acquisition going, business becomes like a video game instead of drudgery. To automate our testing pipeline at work (which took me a few weeks of figuring out what every step in our web interface was and how to massage the data to use each piece), I could instead just use our web tool, like a human would, and have our testing pipeline automated, I would feel like I had superpowers. Once that automation was done, using the same structure I described above, I already felt really powerful, but that feeling was tempered by the knowledge that if we add more steps to the user’s workflow, I will need to go and update the testing pipeline. There are other techniques you could use to create this automation process, for example parsing your SpringBootSpring Boot is an open-source Java-based framework used to create stand-alone, production-grade Spring applications with minimal configuration. Built on top of the Spring Framework, it simplifies the development process by providing auto-configuration, embedded servers (like Tomcat or Jetty), and production-ready features out of the box. Spring Boot eliminates much of the boilerplate code and XML configuration traditionally required in Spring applications, allowing developers to quickly build microservices and web applications. It follows an opinionated approach with sensible defaults while still allowing customization, and has become one of the most popular frameworks for building enterprise Java applications and RESTful APIs. server code-base and generating code based on the results, but we should remember that the vast majority of people at any given company have no ability to something like this, and an individual employee is removed from their Software Department at such a distance that they don’t know what automation they would want if they could ask for it. Add in Software Development timelines and the problem is exacerbated such that most people won’t even ask.

The great lesson of Capitalism and Federalism is that the further you can distribute the capabilities to make powerful decisions down to the person who has the most relevant information, (the store owner, local government, etc.) the better decisions get made. In most American companies that have a Software Department, there is a kind of Communism in place much like the utopian vision of the Cybernetics movement of the 1980’s in the Soviet Union. The great dream of Cybernetics is that we have a central department that uses its advanced computerized techniques and central data to make better decisions on behalf of the rest of the company/country. This was mainly necessary in corporate America because of the extreme time and expense that it takes to get good at computers and writing code. Some of this has been mitigated by the advent of the Graphical User Interface and later the Web Browser, but these better interfaces have mostly been used to provide very structured steps for the user. Computers are powerful because of the composability of their operations. So far this composability has not been exposed to the average person, and I think the above scheme could change that.

Skill Discovery and Ranking

But to back up, so far we have a loop that will build higher and higher levels of abstraction in the business processes that we want to automate. We still have the same issue that a scheme like MCPModel Context Protocol (MCP) is an open protocol introduced by Anthropic that enables AI models to securely interact with external data sources and tools. MCP provides a standardized way for large language models to connect to various resources like databases, APIs, and file systems through a consistent interface. It works by exposing server endpoints that accept structured requests (typically in JSON format) and translate them into appropriate actions using existing APIs. This allows AI assistants to access real-time information and perform actions beyond their training data, while maintaining security and control over what resources the model can access and how it can use them. has - too many skills and no way to tell when one of those skills is relevant to the problem we are currently facing. If we want to give decision making access to a LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on. how do we present to it under the ten skill threshold where it will be able to call the appropriate skill for the job? I think we should use a combination of a EloThe Elo rating system is a method for calculating the relative skill levels of players in competitive games, originally developed for chess by physicist Arpad Elo. In this system, each player has a numerical rating that goes up when they win and down when they lose, with the amount of change depending on the difference between the players’ ratings and the expected outcome. If a lower-rated player beats a higher-rated one, their rating increases more than if they beat someone of equal or lower rating. The Elo system has been adapted for use in many competitive contexts beyond chess, including video games, sports rankings, and machine learning model evaluation. system and vector embeddings to quickly retrieve the relevant code and skill to use.

By the user’s own usage of a skill, the more useful skills will be strengthened in their EloThe Elo rating system is a method for calculating the relative skill levels of players in competitive games, originally developed for chess by physicist Arpad Elo. In this system, each player has a numerical rating that goes up when they win and down when they lose, with the amount of change depending on the difference between the players’ ratings and the expected outcome. If a lower-rated player beats a higher-rated one, their rating increases more than if they beat someone of equal or lower rating. The Elo system has been adapted for use in many competitive contexts beyond chess, including video games, sports rankings, and machine learning model evaluation. ranking and will be more likely to be displayed to the LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on. when it is making new skills or using them. In the same way that neural pathways are strengthened through their repeated use and a certain memory may be associated with another through their frequent juxtaposition, our skills that are used often will be near the top of the list of skills that an LLMA large language model (LLM) is a language model trained with self-supervised machine learning on a vast amount of text, designed for natural language processing tasks, especially language generation. The largest and most capable LLMs are generative pre-trained transformers (GPTs) and provide the core capabilities of chatbots such as ChatGPT, Gemini and Claude. LLMs can be fine-tuned for specific tasks or guided by prompt engineering. These models acquire predictive power regarding syntax, semantics, and ontologies inherent in human language corpora, but they also inherit inaccuracies and biases present in the data they are trained on. can use when it is writing code for a new skill, and skills that are frequently used in quick succession should quickly have a meta-level skill developed that calls all of them together.

In psychology this is the branding effect. As a child, you may like polar bears (scary!) and like sugar (tasty!), but there is no association between them in your mind. After many Christmas ads where the polar bears are drinking Coke, you slowly build that association, so that whenever you see a polar bear in the zoo or in an ad you instantly think of Coke, or even more powerfully you begin to associate the end of the year, being with family and the entire Christmas season with Coke.

If you could write an algorithm that behaves in a similar way, it does not matter how many associations that you might make, or skills you might acquire, since the relevant ones will bubble to the top by their frequent usage.

The Missing Lobe

If we think about the two system thinking, from Thinking Fast and Slow, where System One is fast automatic and stereotypical thinking, and System Two is slow learning and thinking things through carefully, right now we have a good proxy for System Two in the LLMs, but have no standard System One for computers. Regardless if this project works out, we should think about that paradigm and how to build a System One for computers since that is clearly what is missing right now. If you had to relearn how to drive a car every time you went to the grocery store (learning is associated with System Two), you’d get in a lot more accidents. Think about how scary it was to learn to drive in the first place and how effortless it becomes after only a few short hours (In my home state it only takes 60 hours to get a driver’s license once you have the learners’ permit).

I suspect that what happens to make human learning so efficient is not that silicon neural networks are tremendously less efficient at learning a task from zero than the chemical brain (though there definitely is an argument for this because the brain does fundamentally different math to achieve its results), but the brain has a way of composing meta-skills together at higher and higher levels of abstraction that we have largely ignored. When a person learns to drive a car they are thinking about the wheel and the clutch and the brakes as different objects. They are pretty poor to start off with. When they learn the skills individually and combine them as a meta-skill they can learn the entire system faster than a neural network which starts with the system all mixed up and pre-combined (See The Fractured Entangled Representation Hypothesis).

Skill Recall

I think the final missing piece to our automated skill acquisition system is this: how can we efficiently store and search through all of the skills that we generate? We talked a little bit about sorting them by their usage, which is good, but where is the code stored? How are we going to label each command? You could just use a PostgresPostgreSQL also known as Postgres, is a free and open-source relational database management system (RDBMS) emphasizing extensibility and SQL compliance. PostgreSQL features transactions with atomicity, consistency, isolation, durability (ACID) properties, automatically updatable views, materialized views, triggers, foreign keys, and stored procedures. It is supported on all major operating systems, including Windows, Linux, macOS, FreeBSD, and OpenBSD, and handles a range of workloads from single machines to data warehouses, data lakes, or web services with many concurrent users. DBA database (DB) is an organized collection of structured data stored electronically in a computer system. Databases are typically controlled by a database management system (DBMS), which allows users to create, read, update, and delete data efficiently. Common types include relational databases (using tables with rows and columns), NoSQL databases (for unstructured data), and graph databases. Databases are essential for storing and managing information in virtually all modern applications, from websites to enterprise systems, enabling quick data retrieval, concurrent access by multiple users, and maintaining data integrity through various mechanisms. that stores five columns. The first column is the python code that makes up the skill. The second column is the id’s of the other skills that are called by this skill. The third column is the EloThe Elo rating system is a method for calculating the relative skill levels of players in competitive games, originally developed for chess by physicist Arpad Elo. In this system, each player has a numerical rating that goes up when they win and down when they lose, with the amount of change depending on the difference between the players’ ratings and the expected outcome. If a lower-rated player beats a higher-rated one, their rating increases more than if they beat someone of equal or lower rating. The Elo system has been adapted for use in many competitive contexts beyond chess, including video games, sports rankings, and machine learning model evaluation. ranking of the skill. The fourth column is a list of words that describe the skill. The fifth column is the location in the code base where the python code exists. With that information you may be able to build a reliable skill acquisition and retrieval system assuming that you use something like libCSTLibCST is a Python library developed by Instagram (Meta) for parsing Python code into a Concrete Syntax Tree (CST), which preserves all formatting details including whitespace, comments, and parentheses. Unlike Abstract Syntax Trees (ASTs) which lose formatting information, CSTs maintain the exact structure of the original code, making LibCST ideal for building tools that modify Python source code while preserving its style and layout. It’s commonly used for automated code refactoring, code generation, linting tools, and codemods that need to make programmatic changes to codebases while maintaining readability and existing code conventions. for the code transformations. When making a meta-skill you’d also need to recursively look at the sub-skills’ descriptions to make a new description, probably including information about when the user wanted to use the skill and for what reason (this can be a guess at first and refined over subsequent usage of the skill).

Conclusion

What I am arguing for is not another layer of SaaSSoftware as a Service (SaaS) is a cloud computing service model in which a provider delivers application software to clients while managing the required physical and software resources. SaaS is usually accessed via a web application. Unlike other software delivery models, it separates “the possession and ownership of software from its use”. SaaS use began around 2000, and by 2023 was the main form of software application deployment. middleware bloat, not another “Integrator” who sits between me and the machine, but a rethinking of how computers should respond to people. We don’t need AI that mimics the bureaucracy of a Software Department, we need AI that listens closely enough to our revealed behavior to translate it directly into tools. Molding our tools to the shape of the brain instead of forcing the brain to think like a computer. Every click, every RESTREST is a software architectural style that was created to describe the design and guide the development of the architecture for the World Wide Web. REST defines a set of constraints for how the architecture of a distributed, Internet-scale hypermedia system, such as the Web, should behave. The REST architectural style emphasizes uniform interfaces, independent deployment of components, the scalability of interactions between them, and creating a layered architecture to promote caching to reduce user-perceived latency, enforce security, and encapsulate legacy systems. call, every repetitive sequence of steps we take online is a blueprint for automation. If those patterns could be turned into skills that are composable, searchable, and personalized we would finally have a system that empowers the end user instead of locking them in.

The Everything CLIA Command Line Interface (CLI) is a text-based user interface used to interact with software and operating systems by typing commands into a console or terminal. Unlike graphical user interfaces (GUIs) that use windows, icons, and menus, CLIs require users to enter specific text commands to perform tasks. CLIs are favored by developers, system administrators, and power users for their efficiency, scriptability, and precise control over system operations. They allow for automation through shell scripts and often provide more functionality than their GUI counterparts, especially for complex or repetitive tasks. is not about chasing yet another platform war between OpenAI, Anthropic, Microsoft, or AWS. It’s about building the missing System One for computers, the fast, automatic layer that captures what we already know how to do and makes it available to us as code. If we succeed, the role of the “Software Integrator” disappears, because every person becomes their own integrator. Work starts to feel less like drudgery and more like play, and we stop asking permission from central authorities to make our own tools. Code produced at the speed of thought, the promise of computing realized.

I’ll leave you with this:

“The dogmas of the quiet past are inadequate to the stormy present. The occasion is piled high with difficulty and we must rise with the occasion. As our case is new, we must think anew and act anew. We must disenthrall ourselves, and then we shall save our country.” – Abraham Lincoln